Natural Language Processing with NLTK

Timothy Clark

Adapted from: pythonprogramming.net

What is NLP?

Setting up...

Installing Python & PIP

# Ubuntu Linux

sudo apt install -y python3 python3-pip

# Mac - open a terminal!Running .py files

# Windows

py main.py

# Mac/Linux

python3 main.pydef add(a, b):

print("{} + {} = {}".format(a, b, a + b))

print("Hello World!")

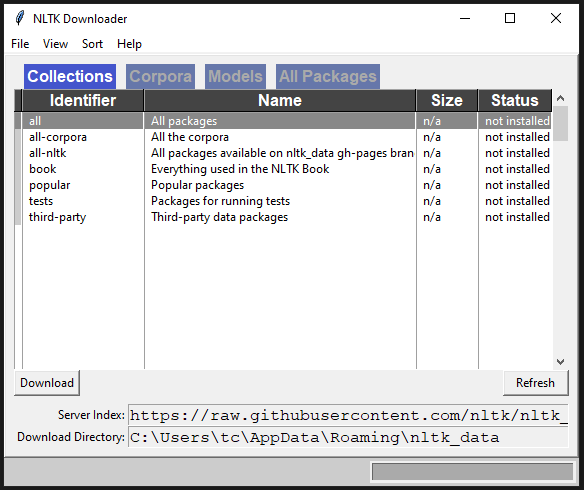

add(2, 6)import nltk

nltk.download()# https://www.nltk.org

# Windows

py -m pip install nltk

# Mac/Linux

pip install nltk

pip3 install nltk

The Basics

Tokenising

Splitting a sentence into words and sentences.

These are called tokens.

from nltk.tokenize import sent_tokenize, word_tokenize

txt = "Hello Mr. Smith, how are you doing today? The weather is great, and NLTK is awesome. The sky is kinda blue. You shouldn't eat cardboard."

sentences = sent_tokenize(txt)

print(sentences)

print()

for s in sentences:

print(s)

words = word_tokenize(s)

print(words)Stop Words

These words are essentially the ones we don't really care about - they don't change the semantic meaning of sentences

from nltk.tokenize import sent_tokenize, word_tokenize

from nltk.corpus import stopwords

sentence = "Hello Mr. Smith, how are you doing today?"

sentence = word_tokenize(sentence)

stop_words = set(stopwords.words("english"))

print(stop_words)

filtered = [w for w in sentence if not w in stop_words]

# Equivalent to:

# filtered = []

# for w in sentence:

# if w not in stop_words:

# filtered.append(w)

print(filtered)Stemming

When processing natural language, the semantic meaning often relates to the "stem" of the word, not the actual word itself

from nltk.stem import PorterStemmer, LancasterStemmer

from nltk.tokenize import word_tokenize

ps = PorterStemmer()

ls = LancasterStemmer()

words = ["walk", "walker", "walking", "walked", "walkly"]

for w in words: print(w, ps.stem(w))

# for w in words: print(w, ls.stem(w))Part of Speech Tagging

PoS tagging involves working out which bits of a sentence you are looking at

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenized = custom_sent_tokenizer.tokenize(sample_text)

def process_content():

try:

for i in tokenized:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

print(tagged)

except Exception as e:

print(str(e))

process_content()

- CC coordinating conjunction

- CD cardinal digit

- DT determiner

- EX existential "there"

- FW foreign word

- IN preposition/subordinating conjunction

- JJ adjective

- JJR adjective, comparative

- JJS adjective, superlative

- LS list marker

- MD modal

- NN noun, singular

- NNS noun plural

- NNP proper noun, singular

- NNPS proper noun, plural

- PDT predeterminer

- POS possessive ending

- PRP personal pronoun

- PRP possessive pronoun

- RB adverb

- RBR adverb, comparative

- RBS adverb, superlative

- RP particle

- TO to (go "to")

- UH interjection

- VB verb, base form

- VBD verb, past tense

- VBG verb, gerund/present participle

- VBN verb, past participle

- VBP verb, sing. present, non-3d

- VBZ verb, third person sing. present

- WDT wh-determiner

- WP wh-pronoun

- WP possessive wh-pronoun

- WRB wh-abverb

Chunking

Involves extracting "chunks" of text, which consist of the parts of speech from before

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenised = custom_sent_tokenizer.tokenize(sample_text)

try:

for i in tokenised:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

chunkGram = r"""Chunk: {<RB.?>*<VB.?>*<NNP>+<NN>?}""" # 0+ Any adverb, 0+ Verb, Proper Noun

chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged)

print(chunked)

chunked.draw()

except Exception as e:

print(str(e))

Chinking

Removing something from a "chunk" that you have extracted from a body of text

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenised = custom_sent_tokenizer.tokenize(sample_text)

try:

for i in tokenised[5:]:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

chunkGram = r"""Chunk: {<.*>+}

}<VB.?|IN|DT|TO>+{""" # Exclude these

chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged)

chunked.draw()

except Exception as e:

print(str(e))

Named Entity Recognition

Recognising "entities" in the text we're analysing

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenized = custom_sent_tokenizer.tokenize(sample_text)

def process_content():

try:

for i in tokenized[5:]:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

# namedEnt = nltk.ne_chunk(tagged)

namedEnt = nltk.ne_chunk(tagged, binary=True) # Treat all as named entity

namedEnt.draw()

except Exception as e:

print(str(e))

process_content()

Entities Recognised

- ORGANIZATION Georgia-Pacific Corp., WHO

- PERSON Eddy Bonte, President Obama

- LOCATION Murray River, Mount Everest

- DATE June, 2008-06-29

- TIME two fifty a m, 1:30 p.m.

- MONEY 175 million Canadian Dollars, GBP 10.40

- PERCENT twenty pct, 18.75 %

- FACILITY Washington Monument, Stonehenge

- GPE South East Asia, Midlothian

NLTK Corpora

Let's read the Bible!

from nltk.corpus import gutenberg

from nltk.tokenize import sent_tokenize

text = gutenberg.raw("bible-kjv.txt")

tokens = sent_tokenize(text)

print(tokens[5:15])

# Check out the corpus! - nltk.download()

WordNet

Basic Usage

from nltk.corpus import wordnet

syns = wordnet.synsets("program")

# Synset

print(syns[0].name())

# Word Only

print(syns[0].lemmas()[0].name())

# Definition

print(syns[0].definition())

# Examples

print(syns[0].examples())

# Synonyms & Antonyms

synonyms = []

antonyms = []

for syn in wordnet.synsets("good"):

for l in syn.lemmas():

# print("l: ", l)

synonyms.append(l.name())

if (l.antonyms()):

antonyms.append(l.antonyms()[0].name())

print(set(synonyms))

print(set(antonyms))

# Similarity

w1 = wordnet.synset("ship.n.01")

w2 = wordnet.synset("boat.n.01")

print(w1.wup_similarity(w2))

w1 = wordnet.synset("ship.n.01")

w2 = wordnet.synset("car.n.01")

print(w1.wup_similarity(w2))

w1 = wordnet.synset("ship.n.01")

w2 = wordnet.synset("cat.n.01")

print(w1.wup_similarity(w2))

w1 = wordnet.synset("ship.n.01")

w2 = wordnet.synset("cactus.n.01")

print(w1.wup_similarity(w2))

Lemmatising

from nltk.stem import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

print(lemmatizer.lemmatize("cats"))

print(lemmatizer.lemmatize("cacti"))

print(lemmatizer.lemmatize("geese"))

print(lemmatizer.lemmatize("rocks"))

print(lemmatizer.lemmatize("python"))

print()

print(lemmatizer.lemmatize("better")) # Default pos is NOUN

print(lemmatizer.lemmatize("better", pos="a"))

print(lemmatizer.lemmatize("best", pos="a"))

print(lemmatizer.lemmatize("run"))

print(lemmatizer.lemmatize("run", pos="v"))

Classifiers

What is classification?

Naïve Bayes

Naïve Bayes

import nltk

import random

from nltk.corpus import movie_reviews

# One Liner

documents = [(list(movie_reviews.words(fileid)), category)

for category in movie_reviews.categories()

for fileid in movie_reviews.fileids(category)]

random.shuffle(documents)

all_words = []

for w in movie_reviews.words():

all_words.append(w.lower()) # Convert to lowercase

all_words = nltk.FreqDist(all_words) # NLTK Frequency Distribution

word_features = list(all_words.keys())[:3000] # Top 3,000 words

def find_features(document):

words = set(document)

features = {}

for w in word_features:

features[w] = (w in words) # Boolean

return features

# print(find_features(movie_reviews.words("neg/cv000_29416.txt")))

featuresets = [(find_features(rev), category) for (rev, category) in documents]

training_set = featuresets[:1900] # First 1900

testing_set = featuresets[1900:] # Post 1900

# Naive Bayes Algorithm

# Posterior = Prior Occurances x Likelihood / Evidence

classifier = nltk.NaiveBayesClassifier.train(training_set)

print("Naive Bayes Accuracy: {:f}%".format((nltk.classify.accuracy(classifier, testing_set))*100))

classifier.show_most_informative_features(15)

# Word Ratios (Found more in pos or neg)

Naïve Bayes

import nltk

# Data Sets

training_set = data[:1900]

testing_set = data[1900:]

classifier = nltk.NaiveBayesClassifier.train(training_set)Pickle!

import nltk

# Data Sets

training_set = data[:1900]

testing_set = data[1900:]

classifier = nltk.NaiveBayesClassifier.train(training_set)SciKit Learn

import nltk

import random

from nltk.corpus import movie_reviews

import pickle

from sklearn.naive_bayes import MultinomialNB, GaussianNB, BernoulliNB

from sklearn.linear_model import LogisticRegression, SGDClassifier

from sklearn.svm import SVC, LinearSVC, NuSVC

from nltk.classify.scikitlearn import SklearnClassifier

# One Liner

documents = [(list(movie_reviews.words(fileid)), category)

for category in movie_reviews.categories()

for fileid in movie_reviews.fileids(category)]

random.shuffle(documents)

all_words = []

for w in movie_reviews.words():

all_words.append(w.lower()) # Convert to lowercase

all_words = nltk.FreqDist(all_words) # NLTK Frequency Distribution

word_features = list(all_words.keys())[:3000] # Top 3,000 words

def find_features(document):

words = set(document)

features = {}

for w in word_features:

features[w] = (w in words) # Boolean

return features

# print(find_features(movie_reviews.words("neg/cv000_29416.txt")))

featuresets = [(find_features(rev), category) for (rev, category) in documents]

training_set = featuresets[:1900] # First 1900

testing_set = featuresets[1900:] # Post 1900

# Naive Bayes Algorithm

# Posterior = Prior Occurances x Likelihood / Evidence

classifier = nltk.NaiveBayesClassifier.train(training_set)

# Open Pickle Classifier

classifier_f = open("naivebayes.pickle", "rb")

classifier = pickle.load(classifier_f)

classifier_f.close()

print("ORIGINAL Naive Bayes Accuracy: {:.2f}%".format((nltk.classify.accuracy(classifier, testing_set))*100))

classifier.show_most_informative_features(15)

# Word Ratios (Found more in pos or neg)

MNB_classifier = SklearnClassifier(MultinomialNB()) # Wrapper converts to NLTK classifier

MNB_classifier = MNB_classifier.train(training_set)

print("MNB_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(MNB_classifier, testing_set)*100))

BernoulliNB_classifier = SklearnClassifier(BernoulliNB())

BernoulliNB_classifier = BernoulliNB_classifier.train(training_set)

print("BernoulliNB_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(BernoulliNB_classifier, testing_set)*100))

LogisticRegression_classifier = SklearnClassifier(LogisticRegression(solver="liblinear"))

LogisticRegression_classifier = LogisticRegression_classifier.train(training_set)

print("LogisticRegression_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(LogisticRegression_classifier, testing_set)*100))

SGDClassifier_classifier = SklearnClassifier(SGDClassifier())

SGDClassifier_classifier = SGDClassifier_classifier.train(training_set)

print("SGDClassifier_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(SGDClassifier_classifier, testing_set)*100))

# Very Inaccurate (< 50%)

SVC_classifier = SklearnClassifier(SVC(gamma="auto"))

SVC_classifier = SVC_classifier.train(training_set)

print("SVC_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(SVC_classifier, testing_set)*100))

LinearSVC_classifier = SklearnClassifier(LinearSVC())

LinearSVC_classifier = LinearSVC_classifier.train(training_set)

print("LinearSVC_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(LinearSVC_classifier, testing_set)*100))

NuSVC_classifier = SklearnClassifier(NuSVC(gamma="auto"))

NuSVC_classifier = NuSVC_classifier.train(training_set)

print("NuSVC_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(NuSVC_classifier, testing_set)*100))

Voting!

import nltk

import random

from nltk.corpus import movie_reviews

import pickle

from sklearn.naive_bayes import MultinomialNB, GaussianNB, BernoulliNB

from sklearn.linear_model import LogisticRegression, SGDClassifier

from sklearn.svm import SVC, LinearSVC, NuSVC

from nltk.classify.scikitlearn import SklearnClassifier

from nltk.classify import ClassifierI

from statistics import mode

class VoteClassifier(ClassifierI):

def __init__(self, *classifiers):

self._classifiers = classifiers

def classify(self, features):

votes = []

for c in self._classifiers:

v = c.classify(features)

votes.append(v)

return mode(votes)

def confidence(self, features):

votes = []

for c in self._classifiers:

v = c.classify(features)

votes.append(v)

choice_votes = votes.count(mode(votes))

conf = choice_votes / len(votes)

return conf

# One Liner

documents = [(list(movie_reviews.words(fileid)), category)

for category in movie_reviews.categories()

for fileid in movie_reviews.fileids(category)]

random.shuffle(documents)

all_words = []

for w in movie_reviews.words():

all_words.append(w.lower()) # Convert to lowercase

all_words = nltk.FreqDist(all_words) # NLTK Frequency Distribution

word_features = list(all_words.keys())[:3000] # Top 3,000 words

def find_features(document):

words = set(document)

features = {}

for w in word_features:

features[w] = (w in words) # Boolean

return features

# print(find_features(movie_reviews.words("neg/cv000_29416.txt")))

featuresets = [(find_features(rev), category) for (rev, category) in documents]

training_set = featuresets[:1900] # First 1900

testing_set = featuresets[1900:] # Post 1900

# Naive Bayes Algorithm

# Posterior = Prior Occurances x Likelihood / Evidence

classifier = nltk.NaiveBayesClassifier.train(training_set)

# Open Pickle Classifier

classifier_f = open("naivebayes.pickle", "rb")

classifier = pickle.load(classifier_f)

classifier_f.close()

print("ORIGINAL Naive Bayes Accuracy: {:.2f}%".format((nltk.classify.accuracy(classifier, testing_set))*100))

classifier.show_most_informative_features(15)

# Word Ratios (Found more in pos or neg)

MNB_classifier = SklearnClassifier(MultinomialNB()) # Wrapper converts to NLTK classifier

MNB_classifier = MNB_classifier.train(training_set)

print("MNB_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(MNB_classifier, testing_set)*100))

BernoulliNB_classifier = SklearnClassifier(BernoulliNB())

BernoulliNB_classifier = BernoulliNB_classifier.train(training_set)

print("BernoulliNB_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(BernoulliNB_classifier, testing_set)*100))

LogisticRegression_classifier = SklearnClassifier(LogisticRegression(solver="liblinear"))

LogisticRegression_classifier = LogisticRegression_classifier.train(training_set)

print("LogisticRegression_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(LogisticRegression_classifier, testing_set)*100))

# Stoicastic Gradient Descent

SGDClassifier_classifier = SklearnClassifier(SGDClassifier())

SGDClassifier_classifier = SGDClassifier_classifier.train(training_set)

print("SGDClassifier_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(SGDClassifier_classifier, testing_set)*100))

# Very Inaccurate (< 50%)

# SVC_classifier = SklearnClassifier(SVC(gamma="auto"))

# SVC_classifier = SVC_classifier.train(training_set)

# print("SVC_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(SVC_classifier, testing_set)*100))

LinearSVC_classifier = SklearnClassifier(LinearSVC())

LinearSVC_classifier = LinearSVC_classifier.train(training_set)

print("LinearSVC_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(LinearSVC_classifier, testing_set)*100))

NuSVC_classifier = SklearnClassifier(NuSVC(gamma="auto"))

NuSVC_classifier = NuSVC_classifier.train(training_set)

print("NuSVC_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(NuSVC_classifier, testing_set)*100))

voted_classifier = VoteClassifier(classifier, MNB_classifier, BernoulliNB_classifier, LogisticRegression_classifier, SGDClassifier_classifier, LinearSVC_classifier, NuSVC_classifier)

print("voted_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(voted_classifier, testing_set)*100))

print("testing_set[0][0] Classification:", voted_classifier.classify(testing_set[0][0]), "Confidence: {:.5f}%".format(voted_classifier.confidence(testing_set[0][0])*100))

print("testing_set[1][0] Classification:", voted_classifier.classify(testing_set[1][0]), "Confidence: {:.5f}%".format(voted_classifier.confidence(testing_set[1][0])*100))

print("testing_set[2][0] Classification:", voted_classifier.classify(testing_set[2][0]), "Confidence: {:.5f}%".format(voted_classifier.confidence(testing_set[2][0])*100))

print("testing_set[3][0] Classification:", voted_classifier.classify(testing_set[3][0]), "Confidence: {:.5f}%".format(voted_classifier.confidence(testing_set[3][0])*100))

print("testing_set[4][0] Classification:", voted_classifier.classify(testing_set[4][0]), "Confidence: {:.5f}%".format(voted_classifier.confidence(testing_set[4][0])*100))

print("testing_set[5][0] Classification:", voted_classifier.classify(testing_set[5][0]), "Confidence: {:.5f}%".format(voted_classifier.confidence(testing_set[5][0])*100))

Sentiment Analysis

What is sentiment analysis?

Lorem ipsum dolor sit amet, consectetur adipiscing elit. Morbi nec metus justo. Aliquam erat volutpat.

Sentiment Analysis

#File: sentiment_mod.py

import nltk

import random

# from nltk.corpus import movie_reviews

import pickle

from sklearn.naive_bayes import MultinomialNB, GaussianNB, BernoulliNB

from sklearn.linear_model import LogisticRegression, SGDClassifier

from sklearn.svm import SVC, LinearSVC, NuSVC

from nltk.classify.scikitlearn import SklearnClassifier

from nltk.classify import ClassifierI

from statistics import mode

from nltk.tokenize import word_tokenize

def load_pickle(filename):

classifier_f = open("pickle/"+filename, "rb")

classifier = pickle.load(classifier_f)

classifier_f.close()

return classifier

def save_pickle(classifier, filename):

save_classifier = open("pickle/"+filename, "wb")

pickle.dump(classifier, save_classifier)

save_classifier.close()

class VoteClassifier(ClassifierI):

def __init__(self, *classifiers):

self._classifiers = classifiers

def classify(self, features):

votes = []

for c in self._classifiers:

v = c.classify(features)

votes.append(v)

return mode(votes)

def confidence(self, features):

votes = []

for c in self._classifiers:

v = c.classify(features)

votes.append(v)

choice_votes = votes.count(mode(votes))

conf = choice_votes / len(votes)

return conf

def find_features(document):

words = word_tokenize(document)

features = {}

for w in word_features:

features[w] = (w in words) # Boolean

return features

documents = load_pickle("documents.pickle")

word_features = load_pickle("word_features_5k.pickle")

# featuresets = [(find_features(rev), category) for (rev, category) in documents]

# save_pickle(featuresets, "featuresets.pickle")

featuresets = load_pickle("featuresets.pickle")

random.shuffle(featuresets)

training_set = featuresets[:10000]

testing_set = featuresets[10000:]

# Naive Bayes Algorithm: Posterior = Prior Occurances x Likelihood / Evidence

classifier = load_pickle("short_reviews.pickle")

MNB_classifier = load_pickle("MNB_classifier.pickle")

# print("MNB_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(MNB_classifier, testing_set)*100))

BernoulliNB_classifier = load_pickle("BernoulliNB_classifier.pickle")

# print("BernoulliNB_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(BernoulliNB_classifier, testing_set)*100))

LogisticRegression_classifier = load_pickle("LogisticRegression_classifier.pickle")

# print("LogisticRegression_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(LogisticRegression_classifier, testing_set)*100))

LinearSVC_classifier = load_pickle("LinearSVC_classifier.pickle")

# print("LinearSVC_classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(LinearSVC_classifier, testing_set)*100))

voted_classifier = VoteClassifier(

classifier,

MNB_classifier,

BernoulliNB_classifier,

LogisticRegression_classifier,

LinearSVC_classifier,

)

print("Vote Classifier Accuracy: {:.2f}%".format(nltk.classify.accuracy(voted_classifier, testing_set)*100))

def sentiment(text):

feats = find_features(text)

return voted_classifier.classify(feats),voted_classifier.confidence(feats)

import sentiment_mod as s

# Positive

print(s.sentiment("This movie was awesome! The acting was great, plot was wonderful, and there were pythons...so yea!"))

# Negative

print(s.sentiment("This movie was utter junk. There were absolutely 0 pythons. I don't see what the point was at all. Horrible movie, 0/10"))

Twitter Sentiment Analysis

from tweepy import Stream

from tweepy import OAuthHandler

from tweepy.streaming import StreamListener

import json

import sentiment_mod as s

#consumer key, consumer secret, access token, access secret

#ckey, csecret, akey, asecret

from secret import *

class listener(StreamListener):

def on_data(self, data):

all_data = json.loads(data)

tweet = all_data["text"]

sentiment_value, confidence = s.sentiment(tweet)

print(tweet, sentiment_value, confidence)

if confidence*100 >= 95:

output = open("twitter-out.txt", "a")

output.write(sentiment_value)

output.write('\n')

output.close()

return True

def on_error(self, status):

print(status)

auth = OAuthHandler(ckey, csecret)

auth.set_access_token(atoken, asecret)

open("twitter-out.txt", "w").close() # Clear file

twitterStream = Stream(auth, listener())

word = input("Keyword(s) to track: ")

twitterStream.filter(track=[word])Graphing Twitter Sentiment

import matplotlib.pyplot as plt

import matplotlib.animation as animation

from matplotlib import style

import time

style.use("ggplot")

fig = plt.figure()

ax1 = fig.add_subplot(1,1,1)

fig.canvas.set_window_title("Live Twitter Sentiment")

def animate(i):

pullData = open("twitter-out.txt","r").read()

lines = pullData.split('\n')

xar = []

yar = []

x = 0

y = 0

for l in lines[-200:]:

x += 1 # Move x along

if "pos" in l:

y += 1

elif "neg" in l:

y -= 1

xar.append(x)

yar.append(y)

ax1.clear()

ax1.plot(xar,yar)

ani = animation.FuncAnimation(fig, animate, interval=1000)

plt.show()www.nltk.org

Fin

tdhc.uk/nltk-talk

Adapted from: pythonprogramming.net

Using: NLTK (nltk.org)

Photos/Gradients: Unsplash

Music: youtu.be/YPyyvmnT1ng